How to Prevent Google from Indexing Your Exploratory Collaboration Server

If you set up your Exploratory Collaboration Server on a host that is accessible from the external internet, you might want to prevent Google from indexing its pages. To do so, you can configure the Collaboration Server to add the X-Robots-Tag HTTP header to the pages it serves. Here is how.

1. Shut down Collaboration Server

Go to the directory created when you expanded the Exploratory Collaboration Server distribution file (named exploratory by default) and run the following command.

docker-compose down

2. Edit the Nginx configuration file (default.conf)

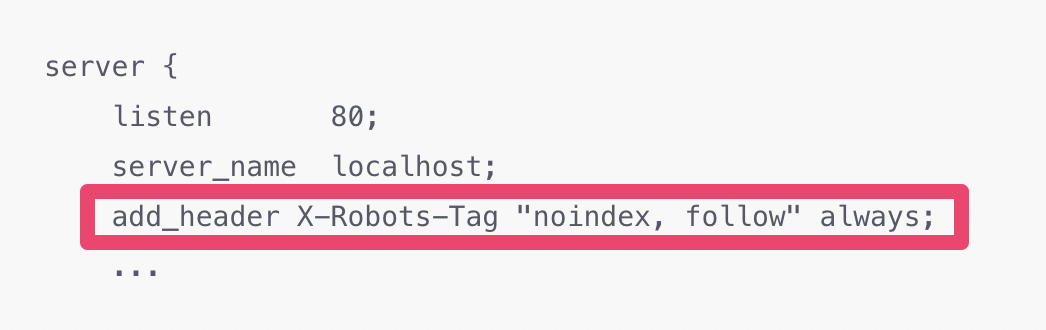

The default.conf file under the exploratory directory is the configuration file for the web server (Nginx) of Exploratory Collaboration Server.

Open default.conf and add the following line inside its server section.

add_header X-Robots-Tag "noindex, follow" always;

Example of default.conf after adding the line:
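As a minimal sketch of the placement, a server section with the line added might look like the following. Everything other than the add_header line is a hypothetical placeholder, not the content shipped with Exploratory; keep whatever directives your default.conf already contains.

```nginx
server {
    # Hypothetical placeholder; keep the listen/server_name values already in your file.
    listen 80;

    # Added line: ask crawlers not to index pages, sent on every response code.
    add_header X-Robots-Tag "noindex, follow" always;

    # The existing location blocks and other directives stay unchanged.
}
```

Note that Nginx applies server-level add_header directives to a location block only when that location defines no add_header directives of its own. If your file already sets headers inside a location, the line may need to be repeated there.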

3. Restart Collaboration Server

docker-compose up -d

Collaboration Server is now up and adds the X-Robots-Tag header to the pages it serves, which tells Google not to index them.
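To confirm the header is actually being sent, one option is to inspect the response headers with curl. The sketch below assumes curl is installed. The has_noindex helper name and the http://localhost/ URL are illustrative, so substitute your server's address.

```shell
# Hypothetical helper: reads HTTP response headers on stdin and succeeds
# if an X-Robots-Tag header containing "noindex" is present.
has_noindex() {
  grep -qiE '^x-robots-tag:.*noindex'
}

# Assumed URL; replace with your Collaboration Server's address.
URL="${URL:-http://localhost/}"

# -s silences progress output, -I requests headers only,
# --max-time caps how long the request may take.
if curl -sI --max-time 10 "$URL" | has_noindex; then
  echo "X-Robots-Tag noindex header found"
else
  echo "X-Robots-Tag noindex header not found"
fi
```

If the header is reported as not found, check that the line was added inside the server section and that the server was restarted after the edit.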